The Apache Hadoop framework forms the kernel of an operating system for big data: it lets users share cluster resources and manages permissions and allocations.

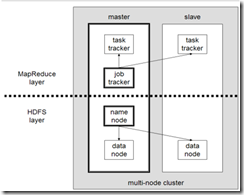

MapReduce Layer:

- The Task Tracker on each node spawns off a separate Java Virtual Machine process to prevent the Task Tracker itself from failing if the running job crashes the JVM.

- The Job Tracker pushes work out to available Task Tracker nodes in the cluster, striving to keep the work as close to the data as possible.
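The idea of keeping work close to the data can be sketched roughly as follows. This is a simplified illustration, not Hadoop's actual scheduler: the class `LocalitySketch`, the method `assign`, and the node names are hypothetical, and the sketch only distinguishes "node holds the data" from "any free node", ignoring refinements such as rack awareness.

```java
import java.util.List;
import java.util.Set;

// Hypothetical sketch of locality-aware task assignment (not Hadoop's API).
class LocalitySketch {

    /**
     * Picks a Task Tracker for a task.
     *
     * @param nodesHoldingBlock nodes that store a copy of the task's input block
     * @param freeTrackers      Task Tracker nodes that currently have a free slot
     * @return the chosen node, or null if no tracker is free
     */
    static String assign(Set<String> nodesHoldingBlock, List<String> freeTrackers) {
        // First choice: a free tracker that already holds the data locally.
        for (String tracker : freeTrackers) {
            if (nodesHoldingBlock.contains(tracker)) {
                return tracker;
            }
        }
        // Fallback: any free tracker; the data must then travel over the network.
        return freeTrackers.isEmpty() ? null : freeTrackers.get(0);
    }
}
```

If the block lives on `node2` and `node3`, and `node1` and `node2` are free, the sketch picks `node2`. When no free node holds the data, it falls back to the first free tracker.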

Crux of MapReduce Architecture:

- Maps are the individual tasks that transform input records into intermediate records. The transformed intermediate records do not need to be of the same type as the input records. A given input pair may map to zero or many output pairs.

- A Reducer reduces a set of intermediate values that share a key to a smaller set of values.
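A minimal word-count sketch may make the two roles concrete. It is written in plain Java and deliberately avoids the Hadoop API, so the class `MapReduceSketch` and its methods `map`, `reduce`, and `run` are illustrative names only. The grouping step inside `run` stands in for the shuffle that the framework would perform between the two phases.

```java
import java.util.AbstractMap;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical in-memory illustration of map and reduce (not the Hadoop API).
class MapReduceSketch {

    // Map: one input record (a line of text) becomes zero or more
    // intermediate (word, 1) pairs. The output type differs from the input type.
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> out = new ArrayList<>();
        for (String word : line.trim().split("\\s+")) {
            if (!word.isEmpty()) {
                out.add(new AbstractMap.SimpleEntry<>(word.toLowerCase(), 1));
            }
        }
        return out; // an empty line yields zero pairs
    }

    // Reduce: all intermediate values that share a key collapse into one sum.
    static int reduce(String key, List<Integer> values) {
        int sum = 0;
        for (int v : values) {
            sum += v;
        }
        return sum;
    }

    // Runs map over every line, groups the pairs by key, then reduces each group.
    static Map<String, Integer> run(List<String> lines) {
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (String line : lines) {
            for (Map.Entry<String, Integer> pair : map(line)) {
                grouped.computeIfAbsent(pair.getKey(), k -> new ArrayList<>())
                       .add(pair.getValue());
            }
        }
        Map<String, Integer> result = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> e : grouped.entrySet()) {
            result.put(e.getKey(), reduce(e.getKey(), e.getValue()));
        }
        return result;
    }
}
```

Here a `String` record maps to `(String, Integer)` pairs, which shows that intermediate records need not share the input type, and a blank line shows an input that maps to zero outputs.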

HDFS Layer:

- The Namenode is the single point for storage and management of metadata. This can become a bottleneck when supporting a huge number of files, especially a large number of small files.

- Data Nodes talk to each other to rebalance data, to move copies around, and to keep the replication of data high.
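A back-of-the-envelope estimate can suggest why many small files strain the Namenode. The sketch below assumes that the Namenode keeps one in-memory object per file and one per block, and it takes the per-object size as a parameter. The figure of 150 bytes used in the note after the code is an assumed rule of thumb, not a number from this text, and the class and method names are hypothetical.

```java
// Hypothetical estimate of Namenode metadata size (assumed model, not measured).
class NamenodeMemorySketch {

    /**
     * Estimates metadata bytes, assuming one object per file plus one per block.
     *
     * @param files          number of files
     * @param blocksPerFile  blocks in each file
     * @param bytesPerObject assumed in-memory size of each metadata object
     */
    static long estimateBytes(long files, long blocksPerFile, long bytesPerObject) {
        long objects = files * (1 + blocksPerFile); // file entries plus block entries
        return objects * bytesPerObject;
    }
}
```

Under these assumptions, 10,000,000 single-block files at 150 bytes per object need about 3,000,000,000 bytes of metadata, while 10,000 files of 8 blocks each need about 13,500,000 bytes. The metadata cost is driven by the number of files and blocks rather than by the volume of data stored.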